In the emerging cloud IT era, we have come to hear of many breakthrough technologies and trends that seemed unthinkable a few years back. Docker, touted as the next 'big thing' of this era, stands well above the rest.

But what is Docker? Why is it so popular? I hope the next few posts will help you gain a sound understanding of Docker's concepts and its roots.

Docker is an open-source containerization engine that automates the packaging, shipping and deployment of software applications as lightweight, portable, isolated and self-sufficient containers. Hence its bottom line: "build once, configure once and run anywhere". Docker makes the containerization paradigm easy to grasp and use, so you can reach the intended objectives quickly and with less hassle.

I have found that new users of Docker find it a little confusing to understand and remember the associated concepts. At its core, Docker uses a client-server architecture. Here is a brief overview of Docker's concepts: its components and elements.

- Docker client - this is the user interface that enables communication between the user and the Docker daemon in order to carry out container management. It talks to the daemon through a RESTful API, carried over a UNIX socket or a network interface. The Docker client can connect to a Docker daemon residing on the same host, or it can communicate with a remote Docker daemon.

- Docker daemon - this is the process that carries out container management and resides on the host machine. As mentioned for the Docker client, the user does not communicate with the daemon directly, but only through the client. By default, the Docker daemon runs with root privileges, whereas the Docker client does not need such privileges.
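
To make the client-daemon conversation more concrete, here is a minimal Python sketch of what a client might send to a local daemon. It is not how the official client is implemented. The socket path `/var/run/docker.sock` and the `/_ping` endpoint are taken from a default Linux installation and the Docker Engine API, not from this post, and the helper names `build_request` and `ask_daemon` are made up for illustration.

```python
import socket

# Assumption: the default daemon socket on a typical Linux installation.
DOCKER_SOCKET = "/var/run/docker.sock"


def build_request(path: str) -> bytes:
    """Build a minimal HTTP/1.1 GET request for the daemon's REST API."""
    lines = [
        f"GET {path} HTTP/1.1",
        "Host: docker",        # placeholder value; HTTP/1.1 requires a Host header
        "Connection: close",   # ask the daemon to close the connection afterwards
        "",
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def ask_daemon(path: str, socket_path: str = DOCKER_SOCKET) -> str:
    """Send one request to a local daemon and return the raw HTTP response."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as conn:
        conn.connect(socket_path)
        conn.sendall(build_request(path))
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")
```

On a machine with a running daemon and sufficient permissions on the socket, `ask_daemon("/_ping")` should return a short HTTP response. Without a daemon, the call raises an `OSError`.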

The following architecture diagram may provide a clear picture of what Docker offers:

| Docker architecture diagram |

- Docker image - this is a read-only template that provides the directions for building and launching Docker containers. These directions may include configuration data, the processes that are to run within the container, and so on. An image has a layered structure, and a union file system combines these layers into a single Docker image. An image starts from another image, popularly known as a base image. The template is created from instructions defined in a file called the Dockerfile, and each instruction adds a new layer to the image. You can push an image you have created to the public Docker registry, which is explained in the next section. The Docker image is the build component of Docker.

| Docker image architecture diagram |
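
The layering described for images can be pictured with a small Python sketch. It is only a toy model of the union idea, assuming that each layer is a plain mapping from file paths to contents. The layer names, paths and contents are invented for illustration, and real union file systems work on actual directories rather than dictionaries.

```python
from collections import ChainMap

# Each layer maps file paths to contents (all values here are made up).
base_layer = {"/etc/os-release": "base image", "/bin/sh": "shell"}
runtime_layer = {"/usr/bin/python3": "interpreter"}
app_layer = {"/app/main.py": "print('hello')", "/etc/os-release": "patched"}


def union_view(layers):
    """Combine layers (bottom layer first) into one merged view; upper layers win."""
    # ChainMap looks in its first mapping first, so the top layer must come first.
    return ChainMap(*reversed(layers))


image = union_view([base_layer, runtime_layer, app_layer])

print(image["/etc/os-release"])  # the app layer's copy hides the base layer's copy
print(sorted(image))             # every path from every layer appears exactly once
```

The point of the sketch is that a lookup returns the topmost copy of a file while the lower layers stay untouched, which is why several images can share the same base layer.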

- Docker registry - this acts as the storage for Docker images. The public Docker registry, commonly known in the Docker community as Docker Hub, hosts public repositories, whose images (created by others) are searchable, freely downloadable and reusable, as well as private repositories, which are accessible only to users with the required permissions. Private repositories may come at a fee. You have to register on Docker Hub to push your own images. In this sense, Docker provides a Git-like system of repositories, push and pull for distributing images. In addition, Docker keeps a local store on the host that holds the images you download (pull) and create. Docker registries are the distribution component of Docker.

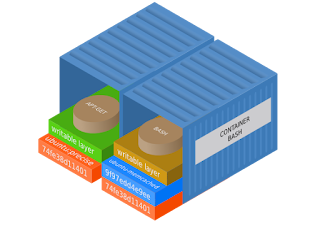
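
As a rough illustration of how images are addressed when pulling from or pushing to a registry, the Python sketch below splits an image reference into a repository and a tag, and composes the matching `docker pull` and `docker push` commands as argument lists. The helper names and the repository `alice/webapp` are hypothetical, and the parsing is deliberately simplified: it assumes references of the form `repository[:tag]` and ignores digest references.

```python
def split_reference(reference: str):
    """Split 'repository[:tag]' into (repository, tag); the tag defaults to 'latest'."""
    last_slash = reference.rfind("/")
    last_colon = reference.rfind(":")
    # A colon that appears before the last slash belongs to a registry
    # host:port (for example 'localhost:5000/webapp'), not to a tag.
    if last_colon > last_slash:
        return reference[:last_colon], reference[last_colon + 1:]
    return reference, "latest"


def pull_command(reference: str):
    """Compose the CLI command that downloads an image into the local store."""
    repository, tag = split_reference(reference)
    return ["docker", "pull", f"{repository}:{tag}"]


def push_command(reference: str):
    """Compose the CLI command that uploads a local image to its registry."""
    repository, tag = split_reference(reference)
    return ["docker", "push", f"{repository}:{tag}"]


print(pull_command("ubuntu"))
print(push_command("alice/webapp:1.0"))
```

The lists can be handed to `subprocess.run` on a machine where the Docker client is installed. Pushing additionally requires being logged in to the registry.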

- Docker container - this is similar to a bucket or, in computing terms, a directory that holds everything an application needs to run, such as configuration files, scripts and libraries, in an isolated environment. As depicted in the Docker architecture diagram above, containers running on a single host are isolated not only from each other but also from the host machine, although they share the host's kernel. As mentioned before, a Docker image provides the directions required to create and bootstrap a container. The primary idea is to keep the application's behavior the same in the development environment and after it has been shipped to the deployment environment. Docker uses a union file system within containers because it is a layered file system; it is also possible to configure a container to use a different type of file system. The image layers are read-only, and the processes running inside the container see only the merged view of these layers. When a container is launched, Docker creates a read-write layer on top of the image. Any change a running process makes to the file system is recorded in this layer, which therefore represents the difference between the current state and the original state. A Docker container is the running component of Docker.

| Docker container architecture |
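
The read-write layer that sits on top of an image can be sketched in a few lines of Python. This is a toy model under simplifying assumptions: files are dictionary entries, deletions are not modelled, and the `Container` class, its methods and the file names are hypothetical, not Docker's implementation.

```python
from types import MappingProxyType


class Container:
    """Toy model: a private read-write layer stacked on a read-only image."""

    def __init__(self, image_files):
        self._image = MappingProxyType(image_files)  # read-only view of the image
        self._rw = {}                                # this container's own layer

    def read(self, path):
        # The read-write layer is consulted first, then the image below it.
        if path in self._rw:
            return self._rw[path]
        return self._image[path]

    def write(self, path, content):
        # Writes never touch the image; they land in the read-write layer.
        self._rw[path] = content

    def diff(self):
        """Return what changed relative to the image (the read-write layer)."""
        return dict(self._rw)


image = {"/app/config.ini": "debug=false"}
first = Container(image)
second = Container(image)

first.write("/app/config.ini", "debug=true")
first.write("/tmp/cache", "data")

print(first.read("/app/config.ini"))   # sees its own modified copy
print(second.read("/app/config.ini"))  # still sees the image's original file
print(first.diff())
```

Two containers started from the same image therefore do not see each other's changes, and the image itself stays unmodified.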

The sections above give a non-exhaustive picture of Docker and its components. Looking further, I can point out the following (again non-exhaustive) advantages of Docker as reasons for its successful arrival on the scene.

- Faster delivery of applications.

- Fast and elegant isolation framework.

- Lower resource usage overhead (CPU/memory).

- Easy to acquire and use.

- Advantages over virtualization technology, which will be discussed more exhaustively in a future post:

  - operating system level virtualization

  - lightweight nature of containers

  - native performance

  - real-time provisioning due to Docker's speed and scalability

Simply put, to run an application using Docker, you have to:

- Write a Dockerfile which contains the required instructions to generate a Docker image for running the desired application.

- Build the Docker image using the Dockerfile. The build command is issued through the Docker client and carried out by the Docker daemon. The built image is added to your local image store and, if you wish, you can push it to a public or private repository on Docker Hub.

- Run the generated Docker image as a Docker container, once an appropriate network and IP address have been allocated. The run command is likewise issued through the Docker client and carried out by the Docker daemon.
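
The three steps above can be sketched with a short Python script that writes a small Dockerfile and composes the `docker build` and `docker run` commands. Everything specific here is an assumption for illustration: the tag `hello-docker:demo`, the file `app.py`, the base image `python:3.12-slim` and the helper names are not from this post. The script only prints the commands; it does not invoke Docker.

```python
import tempfile
from pathlib import Path

IMAGE_TAG = "hello-docker:demo"  # hypothetical image name and tag

# Step 1: the Dockerfile. The base image is an assumption for this example.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY app.py .
CMD ["python", "app.py"]
"""


def write_build_context(directory: Path) -> Path:
    """Write a tiny application and its Dockerfile into the given directory."""
    (directory / "app.py").write_text('print("hello from a container")\n')
    dockerfile = directory / "Dockerfile"
    dockerfile.write_text(DOCKERFILE)
    return dockerfile


def build_command(context: Path, tag: str = IMAGE_TAG):
    """Step 2: ask the daemon (via the client) to build an image from the context."""
    return ["docker", "build", "-t", tag, str(context)]


def run_command(tag: str = IMAGE_TAG):
    """Step 3: start a container from the image and remove it when it exits."""
    return ["docker", "run", "--rm", tag]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        context = Path(tmp)
        write_build_context(context)
        print(" ".join(build_command(context)))
        print(" ".join(run_command()))
```

To actually build and run the image, the two argument lists could be passed to `subprocess.run` on a host where the Docker client and daemon are available, while the build context directory still exists.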

It is as simple as that! Still, the summary above is far from exhaustive, and there is more to each of these concepts. Hence, I intend to go in depth into them, one by one, in future posts.

Before concluding, I would like to mention that any comments, suggestions and ideas on the topic under discussion are highly appreciated.

References:

- Orchestrating Docker by Shrikrishna Holla

- Learning Docker by Pethuru Raj, Jeeva S. Chelladhurai, Vinod Singh